This blog post is part of an ongoing effort to find the best open source tool for Triangulated Irregular Networks (TINs).

Do you want to contribute to this tutorial? Please add your comments below!

Nathan Saylor recommended that I try SAGA GIS, which has various tools for TINs. I've never used SAGA before (here is a quickstart tutorial), and the first challenge was getting it running on my MacBook. The easiest option was Homebrew:

```shell
brew tap osgeo/osgeo4mac
brew install saga-gis --with-app
```

I first converted my DEM into a grid format supported by SAGA (Golden Software Binary Grid, GDAL's GSBG driver), and also reduced the resolution from 10 m to 100 m to avoid out-of-memory issues on my laptop:

```shell
gdal_translate -of GSBG -outsize 600 600 jotunheimen.tif jotunheimen_100m.grd
```
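If you prefer to state the target resolution rather than the pixel count, gdal_translate also accepts `-tr`, and `-r average` averages the source cells that fall into each output cell instead of using the default nearest-neighbour sampling. This is a minimal sketch, not the command I ran above: `downsample_dem` is a hypothetical helper name, and `-ot Float32` is my own assumption to keep the output grid in a floating-point type.

```shell
#!/bin/sh
# Hypothetical helper: downsample_dem SRC DST RESOLUTION
# Resamples SRC to RESOLUTION x RESOLUTION map units (metres in UTM),
# averaging the source cells inside each output cell, and writes a
# Golden Software Binary Grid (GSBG) that SAGA can open.
# -ot Float32 is an assumption, not taken from the original command.
downsample_dem() {
  gdal_translate -of GSBG -ot Float32 \
    -tr "$3" "$3" -r average \
    "$1" "$2"
}

# Same file names as above, 100 m target resolution:
# downsample_dem jotunheimen.tif jotunheimen_100m.grd 100
```

Averaging lowers sharp peaks slightly compared with picking single cells, so it is a trade-off rather than a strict improvement.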

My SAGA installation is a bit quirky: I need to open the dataset twice (via "File > Grid > Load" or the File System tree) before it actually loads.

You'll see your dataset in the Data Manager. Above the dataset you'll see some numbers:

100; 600x 600y; 432050x 6790050y

The first number is the cell size (100 m), the next two are the number of cells in the x and y directions (600 x 600), and the last two are the coordinates of the grid's origin (my dataset is in UTM zone 32N).
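These numbers are enough to work out the ground extent of the grid. Below is a small awk sketch; `grid_extent` is a hypothetical helper, and it assumes the origin is the centre of the lower-left cell (which the `...050` coordinates suggest for a 100 m grid), so the outer edges lie half a cell further out.

```shell
#!/bin/sh
# Hypothetical helper: grid_extent CELLSIZE NX NY XORIGIN YORIGIN
# Assumes the origin is the centre of the lower-left cell.
# Prints "xmin ymin xmax ymax" of the outer cell edges, rounded to whole metres.
grid_extent() {
  awk -v cs="$1" -v nx="$2" -v ny="$3" -v x0="$4" -v y0="$5" 'BEGIN {
    xmin = x0 - cs / 2        # west edge of the first column
    ymin = y0 - cs / 2        # south edge of the bottom row
    xmax = xmin + nx * cs     # east edge of the last column
    ymax = ymin + ny * cs     # north edge of the top row
    printf "%.0f %.0f %.0f %.0f\n", xmin, ymin, xmax, ymax
  }'
}

# Values from the Data Manager line "100; 600x 600y; 432050x 6790050y"
grid_extent 100 600 600 432050 6790050   # prints 432000 6790000 492000 6850000
```

Under that assumption the DEM covers a 60 x 60 km square.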

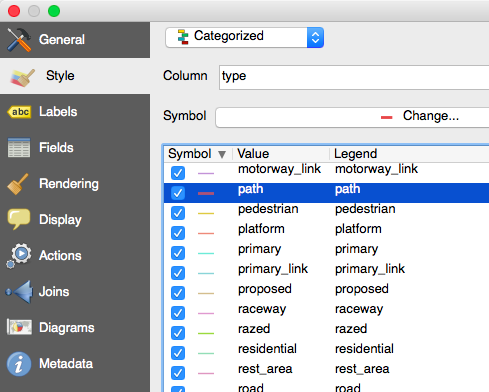

You'll find the TIN tools under Geoprocessing > TIN > Conversion:

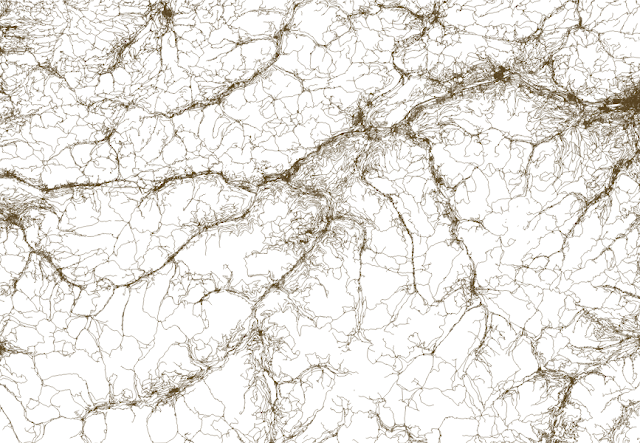

Unfortunately, the tools are poorly documented, so we need to experiment a bit. When I tried "Grid to TIN", I got 717,602 triangles (599 x 599 x 2) for my 600 x 600 = 360,000 pixels, which is not very efficient, as flat areas get just as many triangles as rough terrain. This is similar to the technique I used to create a triangle mesh in three.js.
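The count follows from the grid layout: with one vertex per cell centre, a grid of NX x NY cells has (NX - 1) x (NY - 1) squares between the centres, and each square is split into two triangles. A minimal shell sketch of that arithmetic (`regular_tin_triangles` is a hypothetical name):

```shell
#!/bin/sh
# Hypothetical helper: regular_tin_triangles NX NY
# A regular TIN over NX x NY cell centres has (NX-1)*(NY-1) squares,
# each split into two triangles.
regular_tin_triangles() {
  echo $(( 2 * ($1 - 1) * ($2 - 1) ))
}

regular_tin_triangles 600 600   # prints 717602
```

Since the count grows with the number of cells, the original 10 m grid (100 times as many cells) would give roughly 100 times as many triangles.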

We need to do better, and "Grid to TIN (Surface Specific Points)" gives you more options:

I'm not sure how the different methods work, or whether they can be combined by setting different thresholds below. Please give me some hints if you know the theory and best practices for terrain like this.

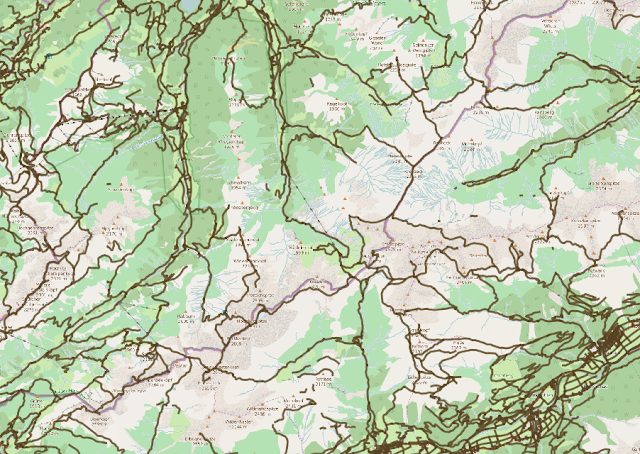

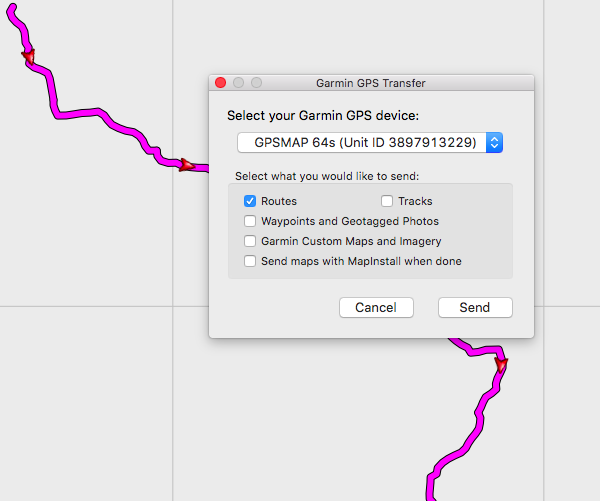
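Since I haven't found documentation for these methods, the following is only my own simplified illustration of what the name "Opposite Neighbours" suggests, not SAGA's implementation. For each interior cell, compare it with the four pairs of opposite neighbours (N/S, W/E, NW/SE, NE/SW): a cell higher than both cells of some pair looks like part of a ridge, lower than both like part of a channel, and both at once like a saddle. Such cells would be candidates for TIN vertices. There are no thresholds here, and the helper name `opposite_neighbours` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: opposite_neighbours < GRID
# GRID: whitespace-separated elevations, one row per line.
# Prints "row col class" for interior cells flagged by at least one
# opposite-neighbour pair. No thresholds: any strict difference counts.
opposite_neighbours() {
  awk '
    { for (c = 1; c <= NF; c++) z[NR, c] = $c + 0; nc = NF }
    END {
      # One offset per pair; the opposite neighbour is the negated offset.
      dr[1] = -1; dc[1] =  0   # north / south
      dr[2] =  0; dc[2] = -1   # west / east
      dr[3] = -1; dc[3] = -1   # north-west / south-east
      dr[4] = -1; dc[4] =  1   # north-east / south-west
      for (r = 2; r < NR; r++)
        for (c = 2; c < nc; c++) {
          hi = 0; lo = 0
          for (k = 1; k <= 4; k++) {
            a = z[r + dr[k], c + dc[k]]
            b = z[r - dr[k], c - dc[k]]
            if (z[r, c] > a && z[r, c] > b) hi++   # higher than both along this line
            if (z[r, c] < a && z[r, c] < b) lo++   # lower than both along this line
          }
          if (hi && lo) print r, c, "saddle"
          else if (hi)  print r, c, "ridge"
          else if (lo)  print r, c, "channel"
        }
    }'
}

# A 3 x 3 example where the middle row is a ridge
printf '1 1 1\n5 5 5\n1 1 1\n' | opposite_neighbours   # prints 2 2 ridge
```

In this sketch, cells on a perfectly flat surface are never flagged, because none of the comparisons is strict.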

I first used the defaults (Opposite Neighbours). I'm not yet friends with SAGA's map/3D viewer, so I exported my TIN to enjoy it in other applications. The export tool is hidden under Geoprocessing > File > Shapes > Exports > Export TIN to Stereo Lithography File (STL).
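To check the triangle count without opening a viewer, you can read it from the file header, assuming SAGA wrote a binary STL. A binary STL starts with an 80-byte header, followed by the number of triangles as a little-endian 32-bit unsigned integer and then 50 bytes per triangle. Here is a sketch using `od`; `stl_triangle_count` is a hypothetical helper, and because `od` reads in host byte order it assumes a little-endian machine, as current Macs are.

```shell
#!/bin/sh
# Hypothetical helper: stl_triangle_count FILE
# Binary STL layout: 80-byte header, uint32 triangle count (little-endian),
# then 50 bytes per triangle. od uses host byte order, so this assumes
# a little-endian machine.
stl_triangle_count() {
  n=$(od -An -t u4 -j 80 -N 4 "$1" | tr -d ' ')
  size=$(wc -c < "$1" | tr -d ' ')
  # A complete binary STL is exactly 84 + 50 * n bytes long
  if [ -z "$n" ] || [ "$size" -ne $(( 84 + 50 * n )) ]; then
    echo "not a complete binary STL: $1" >&2
    return 1
  fi
  echo "$n"
}

# Example (hypothetical file name):
# stl_triangle_count jotunheimen.stl
```

For an ASCII STL, `grep -c 'facet normal' file.stl` counts the triangles instead.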

I then opened the file in MeshLab:

OK, it's promising: you can clearly see the big triangles for lakes, and smaller triangles where the terrain is rough. I got 172,128 triangles, about one-fourth of the regular mesh. But it's not good enough, as parts of the lakes are not horizontal. Which settings should I use to fix this?
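The "one-fourth" is easy to make precise by comparing the 172,128 triangles with the 2 x 599 x 599 triangles of the regular "Grid to TIN" mesh. A tiny awk sketch (the helper name `percent_of` is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: percent_of PART WHOLE, printed with one decimal
percent_of() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f%%\n", 100 * a / b }'
}

percent_of 172128 $(( 2 * 599 * 599 ))   # prints 24.0%
```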

Still needs investigation:

- Is it possible to run SAGA's TIN tools from the command line? Does SAGA CMD (saga_cmd) work on Mac?

- How big a dataset can SAGA handle?
